ChatGPT is a great storyteller. It makes stuff up. And it sounds authoritative in doing so.

For the past few weeks, I’ve been updating my website and social media profiles. I decided to use AI tools, including ChatGPT, to help me edit my content.

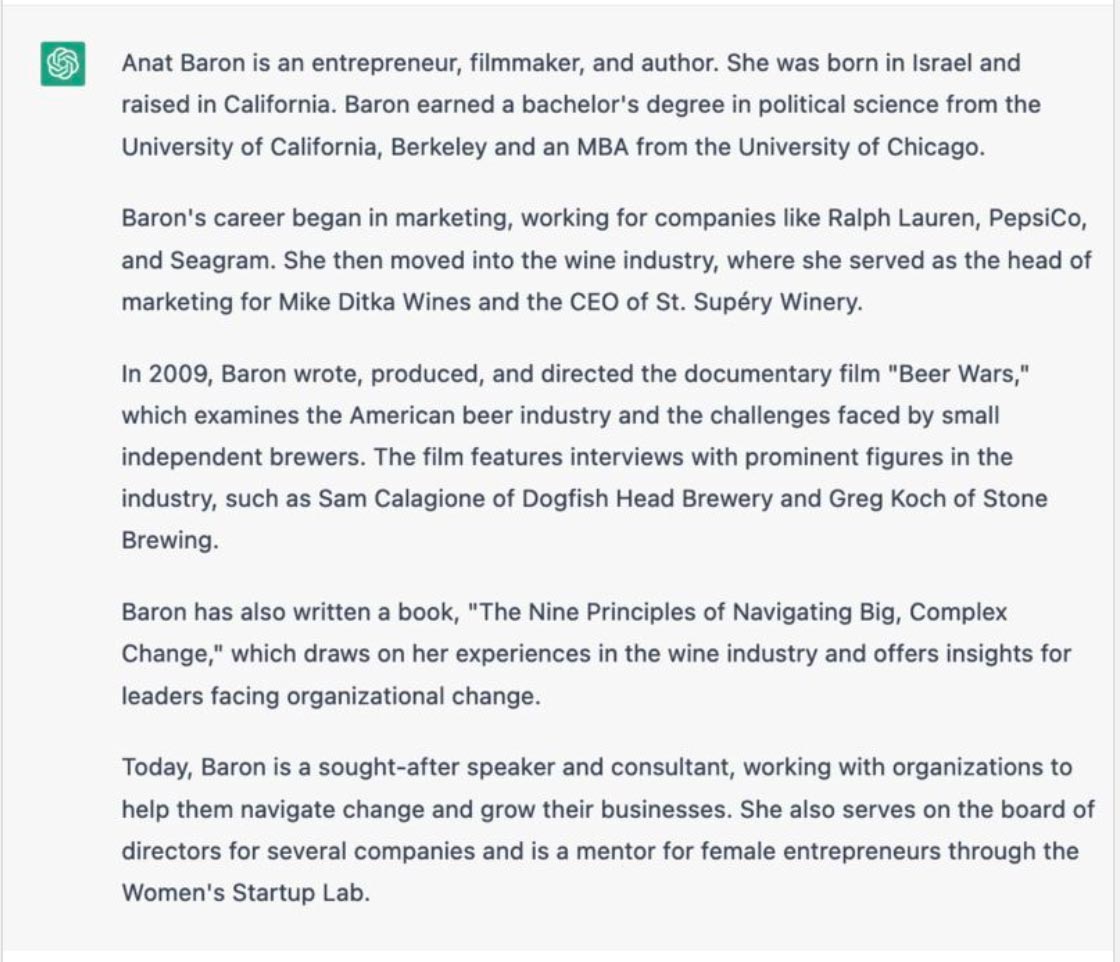

Today, I asked ChatGPT to tell me about myself. As you can see, it told a great story. Except most of it isn’t true. It’s pure fiction. The only true part is that I made “Beer Wars.” Where I grew up, where I went to school, where I worked: all false. I never wrote a book (although the one it described does sound like something I’d want to read). I’m not a mentor.

So, what does this mean? Are ChatGPT and other AI chatbots doomed because they hallucinate, confidently presenting made-up information as fact? Absolutely not. We are at the very beginning of mainstream AI adoption, and these large language models (LLMs) are still a work in progress. ChatGPT 3.5 is a baby, and we need to be patient as it matures. Growing up won’t make it infallible, but there’s still work to be done before we can trust that what it tells us is true.

In the meantime, let’s double-check what it tells us, especially about ourselves.